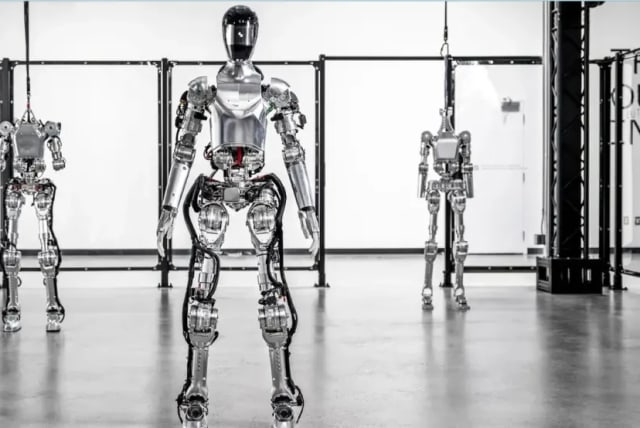

New robot called Figure 01 can speak and move like a human

The robot Figure 01 learned to make coffee by watching a video and holds conversations using OpenAI models. The demo points to advances in mechanics and language, though Livescience.com has raised doubts.

A human-shaped robot that can learn from its mistakes has learned to make a cup of coffee just by watching a video of a person doing it. The new robot, called Figure 01, can also serve food and answer questions in a friendly voice, using technology from OpenAI.

OpenAI is best known for its chatbot ChatGPT. Because the robot uses filler words such as “um,” it seems unlikely that it runs on ChatGPT, although that possibility cannot be ruled out. In an article about the robot, Livescience.com argues that if everything shown in the company’s video works as presented, “it means an advancement in two key areas of robotics.”

With OpenAI, Figure 01 can now have full conversations with people

-OpenAI models provide high-level visual and language intelligence
-Figure neural networks deliver fast, low-level, dexterous robot actions

Everything in this video is a neural network: pic.twitter.com/OJzMjCv443

— Figure (@Figure_robot) March 13, 2024

The first area Livescience.com points to is mechanics. The robot’s humanlike movement shows progress in building machines capable of “dexterous, self-correcting movement.” In other words, engineers can now imitate the human hand, including its smaller bones, more closely than before, and finer motors allow the robot to carry out tasks and hold objects delicately.

The second advancement Livescience.com discusses is natural language processing (NLP). According to the article, OpenAI’s engine responds to queries immediately and can turn data into speech. However, Livescience.com suggests that the video might be prerecorded.

Livescience.com’s skepticism

In the article, Livescience.com suggests that the video showcases “what Figure Robotics is working on rather than a live field test.” Several moments where the robot imitates natural human speech too well, such as at 0:52 and 1:49 according to the article, lead Livescience.com to question whether an AI could “include such random, humanlike tics of diction.”

Livescience.com’s skepticism appears to stem from Figure 01’s ability to reproduce the “unconscious cadence humans use in speech.” Overall, Livescience.com concludes that if the video is a live field test, then Figure 01 is a “giant leap towards the future.”

